The Bottleneck That Wasn't

Here's the punchline up front: if you say you addressed a bottleneck in the system (coding), and then claim it moved somewhere else (e.g. reviews) without having observed a huge productivity increase across the whole system in between, then the bottleneck wasn't where you initially thought it was.

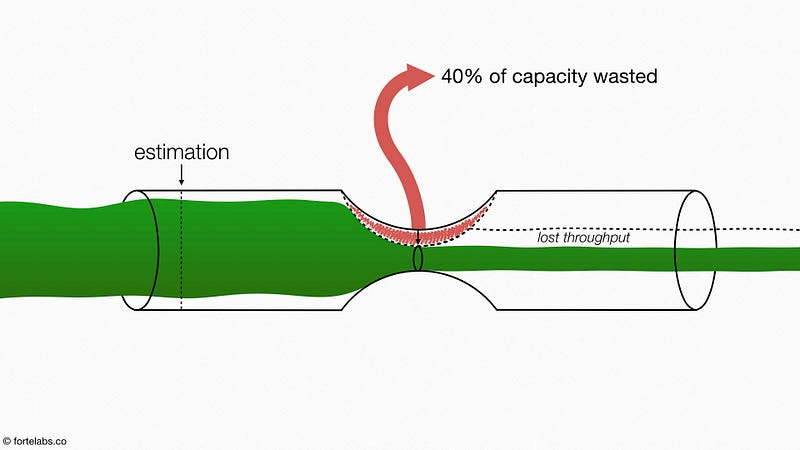

And you're most likely making things worse without knowing it, by piling up even more inventory in the system and choking its throughput.

This whole thing with AI and claims that it increases productivity reminds me of this recording of boarding a Chinese bus:

More activity in front of the bottleneck (the width of the bus door) by non-constraints (the people boarding) wastes the throughput of the whole system (the number of people boarding the bus per unit of time).

If you address a bottleneck, which in the Theory of Constraints is the most constrained part of the system, then by definition you must observe a huge increase in the throughput of the system, i.e. productivity.
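To make that concrete, here is a minimal sketch of a three-stage pipeline. Everything in it is an assumption for illustration: the stage names (coding, review, deploy), the daily capacities, the arrival rate, and the `simulate` helper itself are hypothetical, not measurements from any real team.

```python
def simulate(capacities, arrivals_per_day, days):
    """Run work through sequential stages.

    Returns (finished, queues): the total number of items that left the last
    stage, and the work still waiting in front of each stage.
    """
    queues = [0] * len(capacities)
    finished = 0
    for _ in range(days):
        queues[0] += arrivals_per_day
        # Walk the stages back to front so an item advances one stage per day.
        for i in reversed(range(len(capacities))):
            done = min(queues[i], capacities[i])
            queues[i] -= done
            if i + 1 < len(capacities):
                queues[i + 1] += done
            else:
                finished += done
    return finished, queues


# Hypothetical daily capacities for (coding, review, deploy).
baseline = simulate([5, 3, 4], arrivals_per_day=10, days=100)
faster_coding = simulate([10, 3, 4], arrivals_per_day=10, days=100)
faster_review = simulate([5, 6, 4], arrivals_per_day=10, days=100)

for name, (finished, queues) in [
    ("baseline", baseline),
    ("faster coding", faster_coding),
    ("faster review", faster_review),
]:
    print(f"{name}: finished={finished}, waiting per stage={queues}")
```

In this toy model, doubling coding capacity leaves the number of finished items unchanged and only makes the pile in front of review grow faster. Raising review capacity, the actual constraint here, is the only change that lifts output, and then only until deploy becomes the limit.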

Here's another way to put it by Steve Smith:

All of this reminds me of the story from the early chapters of The Goal. Here’s the gist:

Alex Rogo’s plant had installed expensive robots, and management was proud of them; even the head of the company came for a photo opportunity. Alex bumps into his old physics professor Jonah at an airport, mentions the robots improved efficiency by 36%, and Jonah asks three simple questions:

Did you sell any more products?

Did you lay anyone off?

Did inventories go down?

The answers were no, no, and no (inventory actually went up). Jonah then says: “Then you didn’t really increase productivity.”

The robots had no impact on sales, and they increased inventory without increasing throughput. Because the robots were so expensive, the company pressured the factory to run them at 100% utilization, even when there was no demand for the parts they produced. The result was a huge increase in half-finished inventory.

Since operating costs stayed the same and throughput did not increase, while the robots and the extra inventory tied up more money, the plant's profits actually decreased as a result of adding the robots.

Systems Thinking takeaway: Systems often deteriorate not IN SPITE OF, but BECAUSE OF, our efforts to improve them.

So, you really need to be sure you know what you’re doing.